License Plate Detection

This project tackles Automatic License Plate Recognition (ALPR) by building and training a CNN model with the TensorFlow library.

License plate detection and recognition stand at the forefront of image processing and computer vision, revolutionizing applications in our daily lives. From unattended parking lots to security control and automatic toll collection, the impact of this technology is undeniable. In this blog post, we embark on a journey to develop a license plate detection model using TensorFlow, the Python deep learning library. This work was part of my internship at CERIST, an Algerian information technology research laboratory.

Environment Setup:

Our first step is setting up the environment in Google Colab. We install the necessary dependencies: OpenCV for image processing, TensorFlow for deep learning, and pytesseract (a Python wrapper around the Tesseract OCR engine) for OCR. We also rely on lxml for parsing markup and on the standard-library modules os and glob for file handling.

```python
!pip install opencv-python
!pip install tensorflow
!pip install pytesseract
```

Download the data:

We obtain our data from Kaggle, specifically the 'Car Plates OCR' dataset, which contains more than 28.8k images of vehicles. To keep training manageable, we select a subset of high-quality images (roughly 8k-10k, depending on your compute power) and move them to a separate folder. The annotations for these images are extracted from a JSON file that records, for each image, its file name, its bounding-box annotations, and its license plate information.
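A minimal sketch of this step, assuming the annotation file is a JSON list with one record per image and that each record stores the image path under a key such as `"file"`. The key name, the paths, and the subset size are hypothetical and should be checked against the actual dataset:

```python
import json
import os
import random
import shutil


def load_annotations(json_path):
    """Read the annotation file into a list of per-image records.

    ASSUMPTION: the JSON file holds a list of dicts, one per image.
    """
    with open(json_path, "r", encoding="utf-8") as f:
        return json.load(f)


def select_subset(records, n_images, seed=42):
    """Return a reproducible random sample of at most n_images records."""
    rng = random.Random(seed)
    return rng.sample(records, min(n_images, len(records)))


def copy_subset(records, src_dir, dst_dir, file_key="file"):
    """Copy the selected images into their own folder.

    ASSUMPTION: `file_key` (hypothetical name) is the field holding each
    image's path relative to `src_dir`.
    """
    os.makedirs(dst_dir, exist_ok=True)
    for rec in records:
        rel_path = rec[file_key]
        shutil.copy(os.path.join(src_dir, rel_path),
                    os.path.join(dst_dir, os.path.basename(rel_path)))
```

For example, `subset = select_subset(load_annotations("annotations.json"), 8000)` followed by `copy_subset(subset, "data/", "subset/")`, where all three paths are placeholders. A fixed seed keeps the subset identical across Colab sessions.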

Data exploration

We explore the dataset and filter out images with low resolution or other defects that could hinder model training. Visualizing the bounding box (bbox) annotations shows where each license plate sits in the image and how large it is.

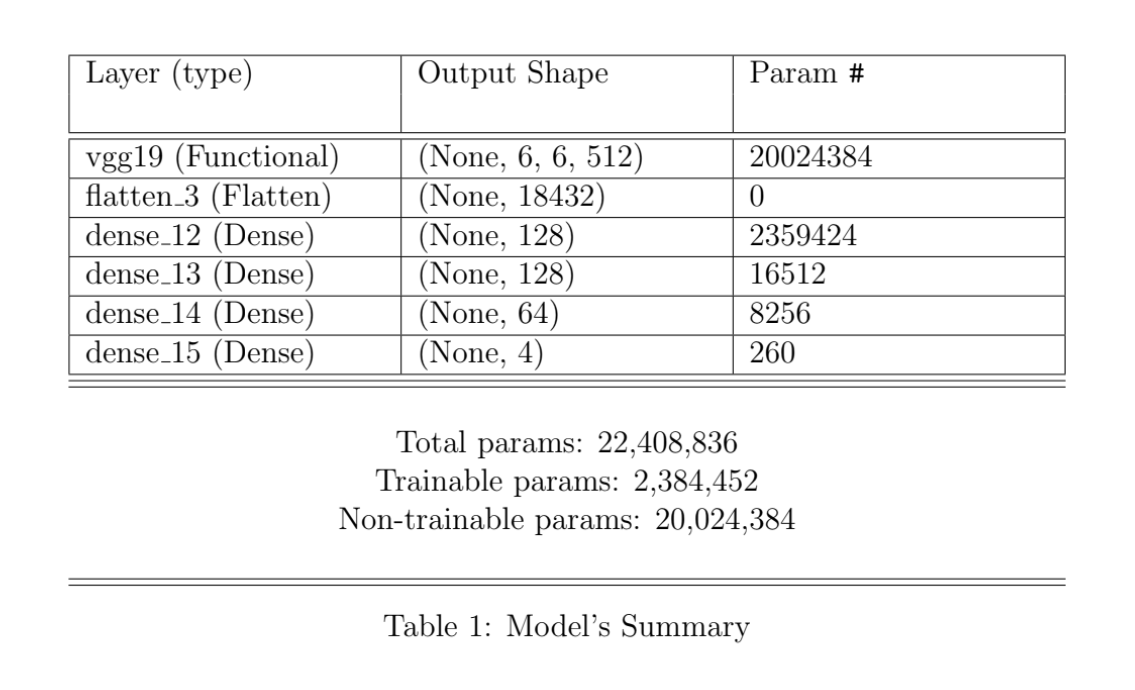
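The filtering and bbox handling can be sketched with NumPy. This assumes each plate is annotated by its corner points `[[x, y], ...]` in pixels and uses arbitrary minimum-resolution thresholds; both are assumptions rather than the project's actual settings. Normalizing boxes to the [0, 1] range makes them independent of image size, which suits a CNN that regresses box coordinates:

```python
import numpy as np


def is_high_resolution(image, min_width=320, min_height=240):
    """Keep an image only if it meets a minimum size.

    The thresholds are illustrative assumptions, not the project's values.
    """
    height, width = image.shape[:2]
    return width >= min_width and height >= min_height


def corners_to_normalized_bbox(corners, image_width, image_height):
    """Convert plate corner points into an axis-aligned box.

    ASSUMPTION: `corners` is a list of [x, y] pixel coordinates.
    Returns (xmin, ymin, xmax, ymax) scaled to [0, 1] by the image size.
    """
    pts = np.asarray(corners, dtype=np.float32)
    xmin, ymin = pts.min(axis=0)  # smallest x and y over all corners
    xmax, ymax = pts.max(axis=0)  # largest x and y over all corners
    scale = np.array([image_width, image_height, image_width, image_height],
                     dtype=np.float32)
    box = np.array([xmin, ymin, xmax, ymax], dtype=np.float32) / scale
    return np.clip(box, 0.0, 1.0)  # guard against corners outside the frame
```

Multiplying a normalized box by `[width, height, width, height]` recovers pixel coordinates, which can be passed to `cv2.rectangle` to produce plots like the one above.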

Setting up and building the model

Note: in any machine learning project, it is important to split your data into two sets: a training set holding roughly 70-90% of the available data, and a test set holding the remaining 10-30%.
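A shuffled split like this can be done with NumPy alone; the 20% test fraction and the seed below are illustrative choices within the range above:

```python
import numpy as np


def train_test_split_indices(n_samples, test_fraction=0.2, seed=42):
    """Shuffle sample indices and split them into train and test sets."""
    if not 0.0 < test_fraction < 1.0:
        raise ValueError("test_fraction must be between 0 and 1")
    rng = np.random.default_rng(seed)
    indices = rng.permutation(n_samples)  # random order, reproducible via seed
    n_test = int(round(n_samples * test_fraction))
    return indices[n_test:], indices[:n_test]  # (train, test)
```

With images and boxes stored as NumPy arrays, `images[train_idx]` and `images[test_idx]` give the two sets. scikit-learn's `train_test_split(..., test_size=0.2, random_state=42)` does the same job if that library is available. Shuffling first matters when images are stored in a meaningful order, for example grouped by camera or location.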

Using TensorFlow's extensive library of functions and its high-level Keras API, we can easily create a CNN model initialized with pre-trained weights (from VGG19), which are then adjusted during the "real" training of the model:

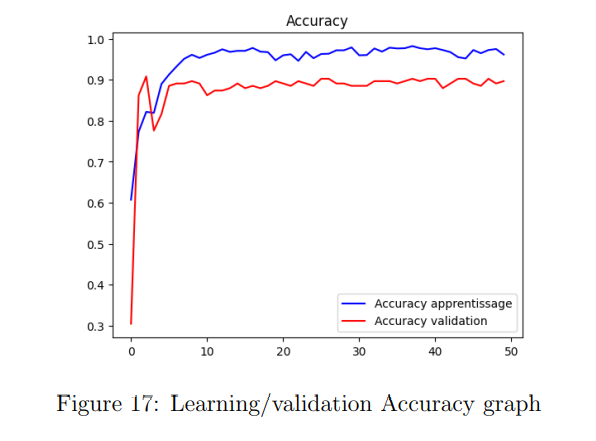
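The exact architecture in the screenshot above can't be recovered from the text, so the following is only a sketch: a VGG19 backbone (the network named in the conclusion) with ImageNet weights, topped by a small dense head that regresses a normalized box `(xmin, ymin, xmax, ymax)`. The head sizes, input size, learning rate, and MSE loss are assumptions:

```python
import tensorflow as tf


def build_plate_detector(input_shape=(224, 224, 3), dense_units=(128, 64),
                         weights="imagenet", freeze_backbone=True):
    """VGG19 backbone plus a dense head that regresses one bounding box.

    ASSUMPTION: head layout, sizes, optimizer settings and loss are
    illustrative, not taken from the original project.
    """
    backbone = tf.keras.applications.VGG19(
        weights=weights, include_top=False, input_shape=input_shape)
    # Freeze the pre-trained convolutional features for the first training phase.
    backbone.trainable = not freeze_backbone

    inputs = tf.keras.Input(shape=input_shape)
    x = backbone(inputs, training=False)
    x = tf.keras.layers.Flatten()(x)
    for units in dense_units:
        x = tf.keras.layers.Dense(units, activation="relu")(x)
    # Four sigmoid outputs: (xmin, ymin, xmax, ymax), each in [0, 1].
    outputs = tf.keras.layers.Dense(4, activation="sigmoid")(x)

    model = tf.keras.Model(inputs, outputs)
    model.compile(optimizer=tf.keras.optimizers.Adam(learning_rate=1e-4),
                  loss="mse")
    return model
```

Image batches should go through `tf.keras.applications.vgg19.preprocess_input` before `fit` or `predict`, since VGG19's weights expect that preprocessing. Training the head with a frozen backbone first, then unfreezing for a short fine-tuning pass at a low learning rate, is a common way to adjust pre-trained weights without overwriting what they already encode.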

Training and Evaluation:

With the model architecture in place, we start training. Training time depends on factors such as the dataset size, the available hardware (for example, GPU versus CPU), and the chosen hyperparameters. After training, we evaluate the model on the held-out test data to gauge how well it detects license plates.

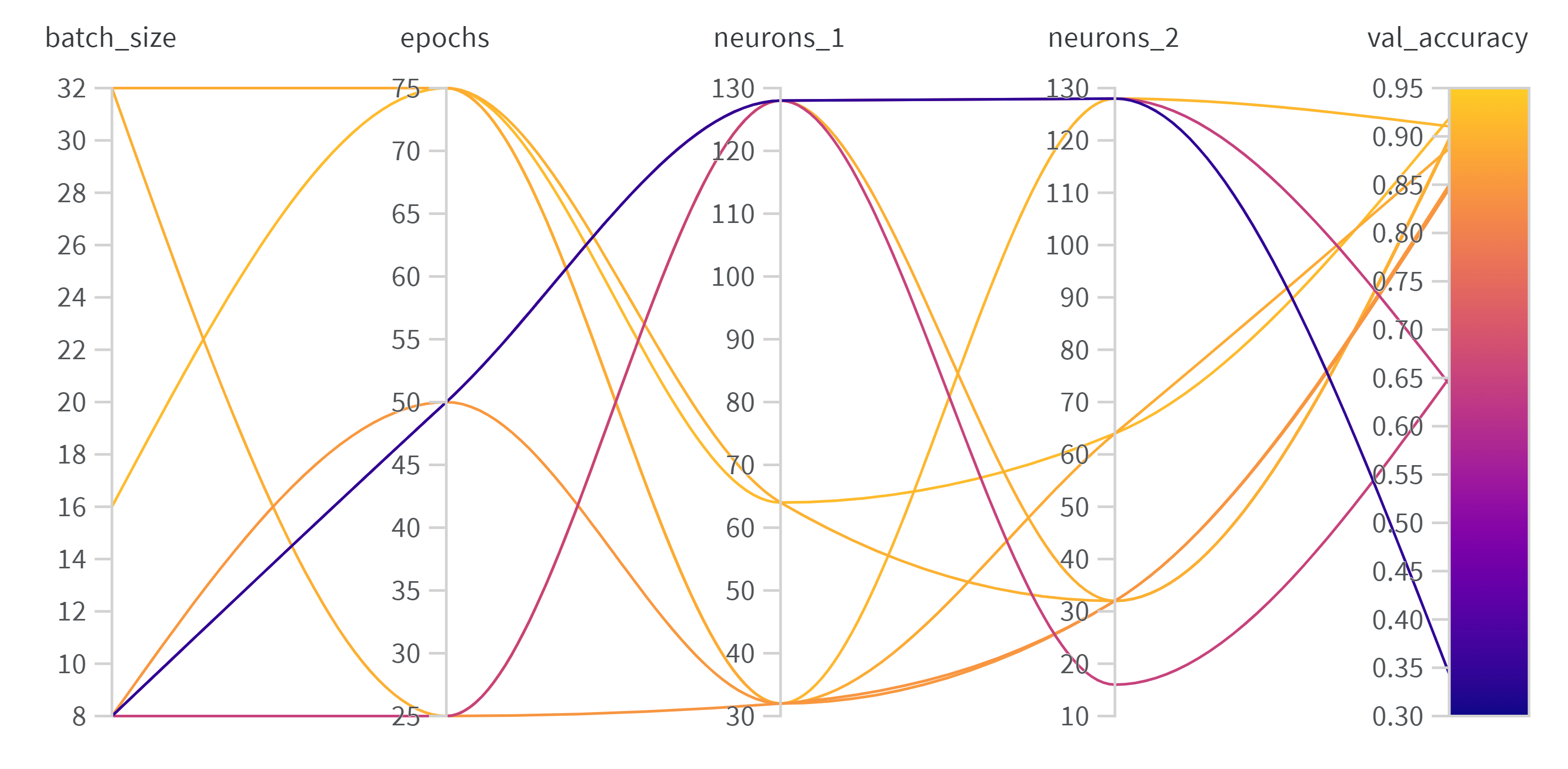
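The text doesn't say which metric was used, so as an assumption here is Intersection over Union (IoU), a standard way to score predicted boxes against ground truth. After `model.fit(...)` and `model.predict(x_test)`, the predicted and true boxes (both as `(xmin, ymin, xmax, ymax)`) can be compared like this:

```python
import numpy as np


def box_iou(pred, true):
    """Intersection over Union for boxes given as (xmin, ymin, xmax, ymax).

    Accepts single boxes of shape (4,) or batches of shape (N, 4).
    """
    pred = np.asarray(pred, dtype=np.float64)
    true = np.asarray(true, dtype=np.float64)
    # Corners of the intersection rectangle.
    ix1 = np.maximum(pred[..., 0], true[..., 0])
    iy1 = np.maximum(pred[..., 1], true[..., 1])
    ix2 = np.minimum(pred[..., 2], true[..., 2])
    iy2 = np.minimum(pred[..., 3], true[..., 3])
    # Zero width or height when the boxes do not overlap.
    inter = np.clip(ix2 - ix1, 0, None) * np.clip(iy2 - iy1, 0, None)
    area_p = (pred[..., 2] - pred[..., 0]) * (pred[..., 3] - pred[..., 1])
    area_t = (true[..., 2] - true[..., 0]) * (true[..., 3] - true[..., 1])
    union = area_p + area_t - inter
    return np.where(union > 0, inter / np.maximum(union, 1e-12), 0.0)


def detection_accuracy(pred_boxes, true_boxes, threshold=0.5):
    """Fraction of images whose predicted box reaches IoU >= threshold."""
    return float(np.mean(box_iou(pred_boxes, true_boxes) >= threshold))
```

An IoU threshold of 0.5 is a common convention for counting a detection as correct; stricter thresholds such as 0.7 give a harsher picture of localization quality.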

Hyperparameter Tuning with Weights and Biases

In our license plate detection project, hyperparameter tuning plays a crucial role in optimizing the Convolutional Neural Network (CNN). By tuning the batch size, the number of epochs, and the number of neurons in the dense layers, we improve the model's accuracy and efficiency. The Weights and Biases (wandb) library logs these hyperparameters alongside each run's metrics, giving us a comprehensive view of how each configuration affects the model's behavior.

- Batch Size: Number of samples processed per training iteration (one weight update).

- Number of Epochs: Number of complete passes over the training dataset.

- Neurons in Dense Layers: Number of units in the fully connected layers, which sets the capacity of the network's head.
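A Weights and Biases sweep over these three hyperparameters might be configured as below. The candidate values, the random search method, the `val_loss` metric name, and the project name are all illustrative assumptions; `train_fn` stands for a training function you would write yourself, which calls `wandb.init()` and reads the values from `wandb.config`:

```python
# Hypothetical search space: values are illustrative, not the project's own.
SWEEP_CONFIG = {
    "method": "random",  # alternatives: "grid", "bayes"
    "metric": {"name": "val_loss", "goal": "minimize"},
    "parameters": {
        "batch_size": {"values": [16, 32, 64]},
        "epochs": {"values": [10, 20, 30]},
        "dense_units": {"values": [64, 128, 256]},
    },
}


def launch_sweep(train_fn, project="plate-detection", count=10):
    """Register the sweep with Weights and Biases and run `count` trials.

    `train_fn` (user-supplied) should call wandb.init(), build and train the
    model using wandb.config.batch_size / epochs / dense_units, and log
    the metric named in SWEEP_CONFIG.
    """
    # Imported lazily so the config above can be inspected without wandb installed.
    import wandb

    sweep_id = wandb.sweep(SWEEP_CONFIG, project=project)
    wandb.agent(sweep_id, function=train_fn, count=count)
    return sweep_id
```

Random search is a reasonable default when only a handful of trials fit the compute budget; `"grid"` would try every combination (27 here), and `"bayes"` uses earlier results to pick the next configuration.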

Conclusion

In this project, we developed a license plate detection method based on a deep convolutional neural network (VGG19). Starting from VGG19's pre-trained weights speeds up training and helps the model perform robustly under a variety of visual conditions.